Algorithm Design 12

Chapter 9 Randomized Algorithm

9.3 Hashing

universal hashing function

Intuition : want the function looks "random"

Def [ universal hashing function ] A family \(\mathcal H\) of functions \(h:U\to\{0,\cdots,n-1\}\) is universal if \(\forall u,v\in U,u\neq v\) , \[ \Pr\{h\gets \mathcal H:h(u)=h(v)\}\le \frac{1}{n} \]

Design : Let \(p\approx n\) be a prime number ( \(n\) is the size of hash table , for simplicity let \(p=n\) )

For all \(x\in U\) , write \(x\) in base-\(p\) : \(x=\sum_{i=0}^{r-1} x_ip^i\) , where \(x_i\in \{0,\cdots,p-1\}\) . \[ \mathcal H:=\left\{h_{\vec a}(x)=\left(\sum_{i=0}^{r-1}a_ix_i\right)\bmod p:\vec a=(a_0,\cdots,a_{r-1}),a_i\in \{0,\cdots,p-1\}\right\} \] Goal : prove \(\forall x,y\in U,x\neq y\) , \[ \Pr\{a_i\gets \{0,\cdots,p-1\}:h_{\vec a}(x)=h_{\vec a}(y)\}\le \frac{1}{n} \] Proof :

Suppose \(x=\sum_{i=0}^{r-1}x_ip^i\) , \(y=\sum_{i=0}^{r-1}y_ip^i\) , so there exists \(j\) such that \(x_j\neq y_j\) . \[ \begin{aligned} &\quad \Pr\left\{h_{\vec a}(x)=h_{\vec a}(y)\right\}\\ &=\Pr\left\{\sum_{i=0}^{r-1}a_ix_i\equiv \sum_{i=0}^{r-1}a_iy_i\pmod p\right\}\\ &=\Pr\left\{a_j(x_j-y_j)\equiv \sum_{i=0,i\neq j}^{r-1}a_i(y_i-x_i)\pmod p\right\}\\ &=\frac{1}{p} \end{aligned} \] This is because \(a_j\gets \{0,\cdots,p-1\}\) , and for all possible \(\sum_{i=0,i\neq j}^{r-1}a_i(y_i-x_i)\) , there exists exactly one \(a_j\) that \(a_j(x_j-y_j)\equiv \sum_{i=0,i\neq j}^{r-1}a_i(y_i-x_i)\pmod p\)

Perfect Hashing

Def : static dictionary with no collision , \(|U|=n\) , space \(\mathcal O(n)\) , query time \(\mathcal O(1)\) ( with \(h(x)\) oracle , performing \(h(x)\) costs \(\mathcal O(1)\) time )

static : only consider lookup operation , no insertion/deletion .

FKS Perfect Hashing [1984]

Intuition : \(2\)-level data structure

- Firstly sample \(h\gets\mathcal H\) , get a hash table , space \(\mathcal O(n)\)

- Secondly , if index \(i\) encounters collision (suppose the collision set is \(B_i=\{u\in U:h(u)=i\}\) ), then sample \(h_i\gets \mathcal H'\) and get a second-level hash table , space \(\mathcal O(|B_i|^2)\) . If collision still happens , then resample \(h_i\) , until no collision happens .

Collision Analysis

Claim : \(\mathcal H=\{h\}\) universal , \(h:[n]\to [m]\) . If \(m=\mathcal O(n^2)\) , then with probability \(\ge 0.9\) there is no collision .

Proof : \(U=\{x_1,\cdots,x_n\}\) , define random variable \(X_{i,j}=\begin{cases}1&h(x_i)=h(x_j)\\0&otherwise\end{cases}\) , define \(X=\sum_{1\le i<j\le n} X_{i,j}\) \[ E[X]=\sum_{i<j}E[X_{i,j}]=\sum_{i<j}\Pr\{h(x_i)=h(x_j)\}\le \frac{\binom{n}{2}}{M} \] By Markov's Inequality , \[ \Pr\{X\ge 1\}\le E[X]\le \frac{n(n-1)}{2M}\le \frac{n^2}{2M} \] Therefore , let \(M=5n^2\) , so \(\Pr\{X\ge 1\}\le \frac{1}{10}\) . \(\square\)

Space Analysis

Claim : \(E[\sum |B_i|^2]=\mathcal O(n)\)

Proof : Let \(Y_i=|B_i|\) , \(Y=\sum |B_i|^2\) , so in the first level , \[ X=\sum \binom{Y_i}{2}=\frac{1}{2}\left(\sum Y_i^2-\sum Y_i\right)=\frac{Y-n}{2} \] Similarly , \(E[X]\le \frac{\binom{n}{2}}{n}=\frac{n-1}{2}\) , so \(E[Y]=E[2X+n]\le 2n-1=\mathcal O(n)\) .

Balls-Bins Game

\(n\) bins , \(n\) balls , \(E[\text{max bins}]=\Theta(\frac{\log n}{\log\log n})\)

\(X_i=\#\text{ balls in bin }i\) , \(Y_{i,j}=\begin{cases}1&\text{ball }j\to \text{bin }i\\0&otherwise\end{cases}\) , so \(X_i=\sum Y_{i,j}\)

Let \(c=\Theta(\frac{\log n}{\log\log n})\) , so \(c=1+\delta\) , \(E[X_i]=1\) , so \[ \Pr\{X_i\ge c\}\le \left(\frac{e^{c-1}}{c^c}\right)\le \frac{1}{n^2} \]

Coupon Collector Problem

\(n\ln n\) balls , \(n\) bins , w.h.p. every bin is non-empty

Load Balancing Problem

\(kn\log n\) balls , \(n\) bins , load of every bin \(\approx (k-1,k+1)\log n\) ( \(k\) not too small )

Application : load balancing in network

Power of two choices

\(n\) bins , \(n\) balls

For every ball , choose \(2\) random bins , and place the ball in the bin with smaller load .

\(E[\text{max bins}]=\Theta(\log \log n)\)

(*) Cuckoo Hashing

worst case : \(\mathcal O(1)\) lookup , dynamic dictionary

Maintain \(2\) hash tables , \(h_1,h_2\gets \mathcal H\)

Insert(x)If \(h_1(x)\) in \(T_1\) is empty , insert \(x\) into \(T_1\)

If \(T_1(h_1(x))\) contains \(x'\) , then insert \(x'\) into \(T_2\) by \(h_2(x')\) , and insert \(x\) into \(T_1\) by \(h_1(x)\)

Following some procedure , until an empty slot is found .

If #iteration $$ threshold \(t\) , rehash all elements

If load \(\le 50\%\) , \(E[\texttt{insertion time}]=\mathcal O(1)\)

Lookup(x): Obviously at most look up \(2\) times .Delete(x): Use lookup then delete .

Application : Closest Pair Problem

using D&C , \(\mathcal O(n\log n)\)

using Hashing , \(\mathcal O(n)\) in expectation

Hash function computation modal :

- Insertion : \(\mathcal O(1)\) in expectation

- Deletion : \(\mathcal O(1)\) in expectation

- Lookup : \(\mathcal O(1)\) in expectation

Algorithm :

Order points randomly \(P_1,\cdots,P_n\) , let \(\delta=d(P_1,P_2)\)

When we process \(P_i\) , we will find whether \(\exists j<i\) s.t. \(d(P_j,P_i)<\delta\) .

If YES , we start a new phase with new \(\delta'=d(P_j,P_i)\)

In each phase , maintain a grid with edge length \(\frac{\delta}{2}\) , each cell contains \(\le 1\) points in \(\{P_1,\cdots,P_{i-1}\}\)

maintain all nonempty cells in a dictionary ( \(U\) : all cells )

Suppose we are processing \(P_i\) , we only need to look up \(25\) cells in the dictionary to find smaller distance .

Whenever we start a new phase , we reconstruct the whole dictionary .

Analysis :

\(X_i=\begin{cases}1&P_i\text{ causes a new phase}\\0&otherwise\end{cases}\) , running time \(T=\sum_{i=1}^n \left(X_i \mathcal O(i)+25\mathcal O(1)\right)\) .

Therefore , \(E[T]=\left(\sum_{i=1}^n \mathcal O(i)\Pr\{X_i=1\}\right)+\mathcal O(n)\) .

Claim : \(\Pr\{X_i=1\}\le \frac{2}{i}\) ( by the initial random permutation )

Proof : Suppose \((P_a,P_b)\) has the minimum distance among \(P_1,\cdots,P_i\) , so \(\Pr\{X_i=1\}=\Pr\{a=i\lor b=i\}\le \Pr\{a=i\}+\Pr\{b=i\}\) .

\(\Pr\{a=i\}=\Pr\{b=i\}=\frac{1}{i}\) , so \(\Pr\{X_i=1\}\le \frac{2}{i}\) . \(\square\)

9.4 Approximate Counting

Approximate Counting Problem

#P : counting problem \(\leftrightarrow\) NP

#P-complete

\(L\in \texttt{NP-Complete}\to L\in \texttt{\#P-Complete}\)

\(L\in \texttt{\#P-Complete}\not\to L\in \texttt{NP-Complete}\) : e.g. counting perfect matching

FPRAS : Fully-Poly Randomized Approximation Scheme

An algorithm in \(poly(n,\frac{1}{\epsilon})\) , \(\Pr\{(1-\epsilon)ANS\le SOL\le (1+\epsilon)ANS\}\ge 0.99\)

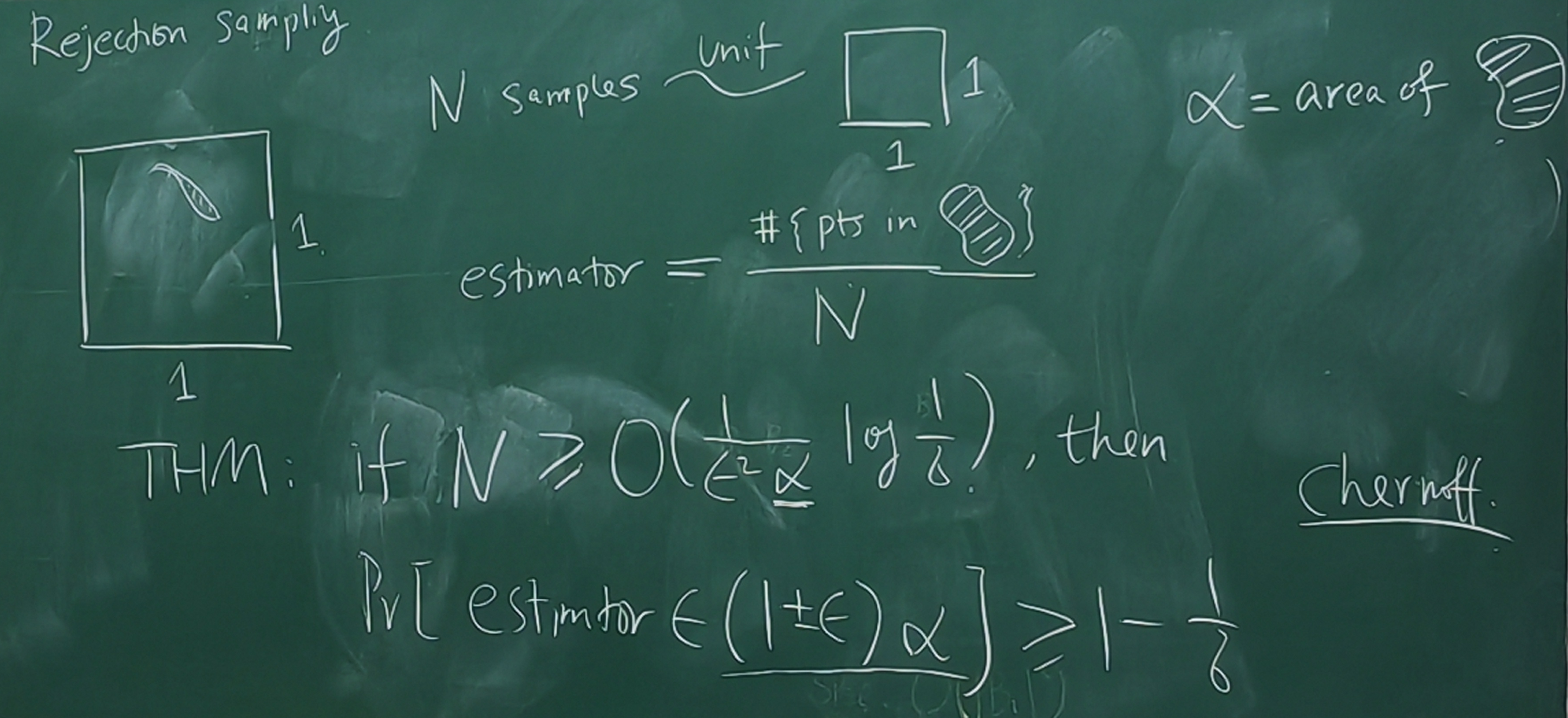

Scheme : Rejection Sampling

Example:

General : to estimate \(|G|\) , construct \(U\) s.t. \(G\subseteq U\)

- Can uniformly sample from \(U\)

- Membership Oracle : \(\forall x\in U\) , decide whether \(x\in G\) or not

- We know \(|U|\)